
People Analytics and the Scientific Method: Part I

Part I: Introduction

Overview

This article is the first in a three-part series explaining how the scientific method can be used to increase the effectiveness and profitability of people analytics in corporate environments. Part I offers a rationale for using the scientific method. Part II explains options for its deployment and Part III compares it to alternative people analytics practices.

Scientific Decision-Making in Organizations

Owing to the significant social and health risks associated with the release of a new drug, pharmaceutical companies are legally required to deploy a process, known as a clinical trial, to demonstrate, for example, that the proposed drug is fit for purpose and that it does not result in unmanageable side effects. Clinical trials, in turn, use the scientific method to establish causality beyond reasonable doubt (Figure 1). Many of today’s business analytics practices are, in fact, drawn from the scientific method.

However, the scientific method is not confined to manufacturing and R&D functions in business: marketing, procurement and finance functions have also benefited from its use for at least the last 20 years. For example, before investing in a campaign, analytical marketers use A/B testing, a technique based on the scientific method, to demonstrate which marketing campaign will deliver the greatest return on investment (Figure 2).
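To make the A/B idea concrete, here is a minimal sketch of how an analytical marketer might compare two campaigns, assuming success is measured as a simple conversion rate. It uses a standard two-proportion z-test; the function name and the pilot figures are hypothetical and are not taken from any real campaign.

```python
from __future__ import annotations

from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two-sided p-value) for H0: campaigns A and B convert at the same rate."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis of no difference is true
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical pilot: each campaign shown to 5,000 customers
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value suggests the difference in conversion is unlikely to be chance alone; converting that difference into ROI would additionally require the margin per conversion, which is outside this sketch.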

Yet when it comes to people analytics, only a few companies – like Unilever for example – use the scientific method to guide their investments in people programs (see Figure 3).

This is remarkable because many companies that use the scientific method to improve their marketing, procurement and R&D decision-making choose not to use it to guide their people investments, despite the fact that they spend more on people than on marketing, procurement and R&D combined. Instead, these companies focus entirely on low-level people analytics techniques like HR reporting, visualization and dashboards to deliver their people analytics results.

Why are these companies not using the scientific method? There are at least two possible reasons. Firstly, many lower-level people analytics approaches like HR reporting, dashboards and visualization can be learned at post-conference workshops or on one-week courses. In contrast, the scientific method requires a significantly higher educational investment, typically a postgraduate research qualification. It may be that some HR functions, unlike their marketing and R&D counterparts, are not willing to make this investment.

Secondly, the scientific method is not widely publicized because doing so is not in the interests of technology companies: the scientific method requires significantly less technology to be effective than lower-level analytic approaches, which rely on vast quantities of data and on technology to display that data (rather than analyse it). Technology companies are therefore unlikely to promote high-level analytics techniques that would reduce their sales revenues. The result is that technology companies have “trained” the market to think of people analytics as a technology-driven discipline.

There is, of course, a place for lower-level analytics like dashboards, visualization and HR reporting in people analytics. In fact, they are essential for statutory reporting and business problem identification. Unlike the scientific method, however, they cannot establish causal links between people processes and desired business outcomes; nor can they be used to identify those people processes which require modification to enable the business to achieve its business objectives.

The result is that companies which focus purely on low-level people analytics are likely to be wasting a significant proportion of their human capital investments. The maxim “low-level analytics deliver low-level returns” has never been truer, and this is undoubtedly leading the people analytics industry into Gartner’s Trough of Disillusionment.

The next article in this series will describe a methodology for implementing the scientific method.

The Future of Human Resources in the Age of Automation

By Max Blumberg, PhD

The full version of this article will be published in Winmark’s excellent C-Suite Report following my session there in November:

- Report: http://www.winmarkglobal.com/c-suite-report.html

- Session: http://www.winmarkglobal.com/wm/ViewEvents?function=Upcoming&network=SHR

Intro

We’ve all seen films where artificial intelligence replaces humans en masse, and much debate swirls around how much of this could ever become reality.

Meet Erica, perhaps the world’s most advanced humanlike robot yet. She demonstrates that we may not be too far away from silver-screen-style AI workers.

https://www.youtube.com/watch?v=MaTfzYDZG8c

Yet when we talk of automation and robotics, we really shouldn’t be looking to tomorrow. The revolution has already occurred, and automation is, or at least should be, transforming the world of HR.

Putting it into context

Let’s consider a few statistics…

45 percent of work activities could be automated using already demonstrated technology.

Yet fewer than 5 percent of occupations can be entirely automated using current technology. However, about 60 percent of occupations could have 30 percent or more of their core activities automated.

The effects of this are already being felt.

Last year, Barclays announced that, in the immediate future, 30,000 banking employees would lose their jobs to automation.

More recently, Apple’s manufacturer Foxconn replaced 60,000 factory workers with robots.

So, what does all of this mean for the world of HR?

In short, these drastic job market changes demand that HR professionals brace themselves. Here is what may lie ahead for your role…

Corporate culture: What does a culture where AI, robotics and humans intermingle actually look like? This is a particularly relevant question given the presumed hostility that many workers may exhibit as robots increasingly replace the colleagues beside them.

Performance management: Performance-based management, comparing one employee to the next, is the traditional means of assessing workforce progression.

As robots increasingly enter our workforces, how can a single scale be defined that spans both humans and robots? This may well be a question that you’ll have to answer.

Employee relations: Technology has already disrupted markets; take Uber, a perfect example of how a tidal wave of now-unhappy workers can take action against automation. The question of how to harness technological advancement while handling disgruntled employees may well become relevant sooner rather than later.

Motivation and rewards: Some experts reason that robotics will drive down wages; if this happens, you’ll need to rethink your reward system. How can workers remain motivated when working alongside the automated technology that has taken bread off their table?

Finally, some schools of thought argue that you’ll look after far fewer staff, whilst others contend that you’ll manage departments in flux, as automation may boost productivity and staffing levels in other areas.

Are you sitting comfortably?

Perhaps the HR department itself is not immune to a certain level of replacement: existing technology already boasts advanced capabilities for payroll, scheduling and benefits administration. Yet until recently, such automation took the form of separate tools rather than a single robot that threatens your role.

Now, however, there’s Talla, a desktop chatbot in the final stages of being prepared for office life, taking on tasks such as on-boarding, 24/7 workforce support, and Tier 0 and Tier 1 support. And version two is already in the making.

Author

Max Blumberg, PhD is Founder of the Blumberg Partnership workforce and sales force analytics consultancy and Visiting Researcher at Goldsmiths, University of London.

Avoiding People Analytics Project Failure

Contents

1. Introduction

2. The Four-Block People Analytics Model

3. Sources of People Data

4. How to create Robust People Data Sets with Strong Correlations

- People Metrics Definition Process

- The People Metrics Definition Workshop for Operational Managers

- Restricted Range, Babies & Bathwater

- Technical Reasons Why Data May Not Hang Together

5. Take Away

1. Introduction

Making sense of people data is a struggle for many HR professionals. People analytics is only effective when data collection is focused on achieving a particular management objective, such as improving talent management processes like recruitment or retention, or demonstrating HR’s contribution to the value/ROI of these processes. Despite this core concept of people analytics, many companies simply analyse whatever data is nearest to hand, with results that are anything but insightful. Ad hoc data analysis of this kind invariably ends in project failure, delivering only a wasted budget and a belief that people analytics is just hype.

As most technical analysts will tell you, people analytics project failure usually boils down to just one thing: hardly any significant correlations can be found in the data.

This article will help you harness people analytics, and avoid project failure, by presenting a systematic, cost-effective methodology for creating robust data sets that correlate. We will be focussing on two tools: the People Metrics Definition Process and People Metrics Definition Workshop for Operational Managers.

2. The Four-Block People Analytics Model

The People Metrics Definition Process methodology holds the premise that the primary – and perhaps only – reason for investing in people programmes – such as recruitment, development, succession planning, and compensation – is to deliver the workforce competencies required to drive the employee performance needed to achieve specific organisational objectives. Graphically, this can be expressed as follows:

People Programmes → Workforce Competencies → Employee Performance → Organisational Objectives

If any link in this chain (the Four-Block People Analytics Model) is broken, investments in people programmes are not delivering the organisational objectives aimed for.

The strength of the link between any two blocks in the model is measured by their statistical correlation. When two blocks are correlated, a change in the values of one block can be predicted from a change in the values of the other. Consider a real-world example: a training programme improves employees’ competency scores, which in turn results in a predictable, corresponding increase in employee performance ratings. This would show that competencies and employee performance are correlated. However, where there is a poor correlation between competencies and employee performance, training programmes which increase competency scores will not result in increased employee performance. From a business perspective, this means that the training spend was a wasted investment.
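The correlation check described above can be sketched for every adjacent link in the chain at once. This is a minimal illustration, assuming one numeric metric per block for each employee; the block names, the sample data and the 0.3 threshold for a "weak" link are all hypothetical choices, not values prescribed by the model.

```python
from __future__ import annotations

from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two series of equal length (at least 2 values)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


def check_chain(blocks: dict[str, list[float]], min_r: float = 0.3) -> list[tuple[str, str, float, bool]]:
    """Correlate each adjacent pair of blocks, in model order; flag links below min_r."""
    names = list(blocks)  # dicts keep insertion order, i.e. the order of the model
    report = []
    for left, right in zip(names, names[1:]):
        r = pearson_r(blocks[left], blocks[right])
        report.append((left, right, r, r >= min_r))
    return report


# Hypothetical data for six employees, one metric per block
team = {
    "training_hours": [2, 4, 6, 8, 10, 12],
    "competency_score": [2.1, 2.8, 3.2, 3.9, 4.1, 4.6],
    "performance_rating": [3.0, 4.5, 2.5, 4.0, 3.0, 3.5],
    "revenue_k": [310, 450, 260, 400, 300, 360],
}

for left, right, r, ok in check_chain(team):
    print(f"{left} -> {right}: r = {r:.2f} ({'ok' if ok else 'weak link'})")
```

In this made-up data, training hours track competency scores closely and performance tracks revenue closely, but competency and performance are barely related, so the middle link is flagged: the situation in which training spend would not translate into performance.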

3. Sources of People Data

The way you obtain the data used in each block is essential for establishing correlations.

1. Data sources for organisational objectives

Organisational objectives data reflects the extent to which business objectives are being achieved. This data is often expressed in financial terms, although there is an increasing drive towards the inclusion of cultural and environmental measures. A common and critical pitfall to avoid here is using workforce objectives (such as retention or engagement) instead of organisational objectives.

2. Data sources for employee performance

Employee performance data is typically generated by managers in the form of multidimensional ratings obtained during performance reviews. An employee performance rating should simply reflect the employee’s potential to contribute to organisational objectives. Note that the term potential is used deliberately to emphasise that employees who do not fully contribute to organisational objectives today may do so in the future if they are properly trained and developed (assuming that they can be retained). A common error here is confusing employee performance measures with competency measures, which we define next.

3. Data sources for competencies

Competencies are observable employee behaviours hypothesized to drive the performance required to deliver organisational objectives. The word “hypothesized” is used to emphasise that the only way of knowing whether the company is investing in the right competencies is to measure their correlation with employee performance. If the correlation is low, it would be reasonable to assume that the company is working with the wrong competencies (or that there is a problem with performance ratings).

There are three problems usually associated with competency data. First, competency ratings are often based on generic organisation-wide competency frameworks. The resulting competencies are therefore typically so general as to be useless for any specific role.

Second, competency frameworks are often created by external consultancies lacking full insight into the real competencies required to drive employee performance in a particular sector and organisational culture. The only way to create robust competency frameworks is to obtain them from the operational personnel to whom the competencies apply. The People Metrics Definition Workshop for Operational Managers (which we explore later) will achieve this.

Finally, many companies confuse employee competencies with employee performance, presenting competencies as part of an employee’s performance rating. As noted above, competencies are merely hypothesized predictors of employee performance; they are not standalone measures of it. For a real-world example, good communication competency helps a salesperson to sell more, but a high communication competency score will not make up for missing a sales target.

4. Data sources for People Programmes

Programme data usually reflects the efficiency (as opposed to effectiveness) of talent management programmes, such as the length of time it takes to fill a job role, the cost of delivering a training programme, and so on. Programme data is usually sourced from the owner of the relevant people process.

For further ideas about people data measurement, visit www.valuingyourtalent.com

4. How to create robust people data sets with strong correlations

Here are four remedies for creating a Four-Block People Analytics model that actually correlates:

1. People Metrics Definition Process

The most common reason for poor correlations is using data not specifically generated with a defined purpose in mind. This is like trying to cook a sticky-rice stuffed duck without buying rice or a duck – it’s simply not going to end well.

The best way to cook up a successful people analytics project is to use a People Metrics Definition Process. This starts with the end in mind, by first defining the business objectives data, and then works backwards through the Four-Block People Analytics Model:

1. Organisational objective

First ask: “What organisational objective(s) need to be addressed?” Where possible, choose high profile objectives such as those which appear in the annual report (such as revenues, costs, productivity, environmental impact, and so on). Then narrow down this list to those metrics which, for example, reflect targets that are being missed. This approach ensures your people analytics has relevance.

2. Employee performance

Now consider how the employee performance required to achieve these objectives will be measured. This is discussed below under the third remedy, Restricted Range, Babies and Bathwater.

3. Competencies

Next define the competencies likely to be needed to drive this employee performance. Note that global competencies usually exhibit far lower correlations than role-specific competencies. Competency definition is discussed below, under the heading The People Metrics Definition Workshop for Operational Managers.

4. People Programmes

Finally consider the kinds of people programmes that will be required to deliver these competencies and also how to measure the efficiency of these programmes. Bear in mind that a people programme is only as effective as the competency metrics that it produces.

Companies performing the above steps in any other order should not be surprised if they end up with poor correlations between their people programmes, competencies, employee performance and organisational objectives.

2. The People Metrics Definition Workshop for Operational Managers: Avoiding the Talent Management Lottery

Probably the second most common reason for poor correlations in the Four-Block People Analytics model is the use of inconsistent employee performance data. Inconsistent performance data is usually the result of managers not knowing what good performance looks like in their teams. This means that the company lacks an analytical basis for distinguishing between its high and low performers, which turns the allocation of development, compensation and succession expenditures into a talent lottery rather than an analytically based process.

By far the best (and easiest) way of transforming a performance management lottery into an analytical programme is the People Metrics Definition Workshop for Operational Managers.

This is simply a facilitated meeting in which operational managers are guided through the People Metrics Definition Process. The key deliverables are:

- A set of people programme, competency, employee performance and organisational objectives metrics

- Increased engagement between operational managers and the data that they will be using to manage their teams. You simply cannot get operational managers to engage with HR processes and data if the model’s metrics definitions are provided by non-operational parties, such as external consultants or HR. Even if these external metrics are of high quality, operational managers will still tend to treat them as tick-box exercises because they do not believe (probably correctly) that non-operational parties can truly understand the business and its culture as well as they do.

The role of HR and/or external facilitators in the People Metrics Definition Workshop is therefore not to provide content but to expertly facilitate the gathering of people metrics and help managers reach a consensus.

When it comes down to the crunch, this workshop ultimately has an enormously positive impact on correlations within the Four-Block People Analytics model.

3. Restricted Range, Babies and Bathwater

Another problem that comes from not properly distinguishing between high and low performing employees is known as Restricted Range. Restricted range means that team member performance ratings tend to be clustered around the middle rather than using the full performance rating range. For example, the graph below shows a typical team performance distribution of a company using a 1 (poor performance) to 6 (high performance) rating scale. Note the number of ratings clustered around 4 and 5 instead of using the full 1 – 6 range:

There are many possible reasons for restricted range. Sometimes it’s because managers simply do not know what good performance looks like as discussed above. Another common cause is that in order to maintain team engagement and unity, they avoid low scores; on the flip side, they may avoid high scores so as to avoid feelings of favouritism.

Restricted range carries two serious implications:

- By definition, restricted range narrows employee performance ratings; in doing so, it also seriously limits the possibility of decent correlations in the Four-Block People Analytics Model

- If everyone in a team has similar ratings, then managers must be using some other basis – some other scale even – for making promotions and salary decisions. Secret scales cannot be good for team morale or guiding employee development, compensation and succession planning investments.
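The effect of restricted range on correlations can be illustrated with a small simulation. This is a sketch under assumed parameters: a latent performance score that correlates 0.6 with competency by construction, one manager who uses the full 1 to 6 scale and another who only ever gives 4s and 5s. The cut points, the seed and the function names are arbitrary illustrations.

```python
from __future__ import annotations

import random
from bisect import bisect
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


# Assumed cut points on the latent performance scale that map onto a 1-6 rating
FULL_SCALE_CUTS = [-1.5, -0.75, 0.0, 0.75, 1.5]


def full_range_rating(perf: float) -> int:
    # bisect returns 0..5, so ratings run from 1 (poor) to 6 (high)
    return bisect(FULL_SCALE_CUTS, perf) + 1


def restricted_rating(perf: float) -> int:
    # A manager who only ever gives 4s and 5s, splitting the team at the midpoint
    return 5 if perf >= 0.0 else 4


def simulate(n: int = 5000, seed: int = 7) -> tuple[float, float]:
    rng = random.Random(seed)
    competency = [rng.gauss(0.0, 1.0) for _ in range(n)]
    # Latent performance: true correlation with competency is 0.6 by construction
    latent = [0.6 * c + 0.8 * rng.gauss(0.0, 1.0) for c in competency]
    r_full = pearson_r(competency, [full_range_rating(p) for p in latent])
    r_restricted = pearson_r(competency, [restricted_rating(p) for p in latent])
    return r_full, r_restricted


r_full, r_restricted = simulate()
print(f"full 1-6 scale: r = {r_full:.2f}; ratings of 4 and 5 only: r = {r_restricted:.2f}")
```

The compressed ratings discard information about who is genuinely stronger or weaker, so the observed correlation with competency drops even though the underlying relationship is unchanged.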

Addressing restricted range is usually a cultural issue with causes that must be carefully understood before attempting intervention. One remedy usually involves explaining to managers that more differentiation between their high and low performers will result in the right team members getting the right development which in turn will result in higher team performance for the manager.

Perhaps this is the time to raise the thorny topic of employee ranking, which has received such bad press that employee rating/ranking is supposedly no longer used at companies such as Microsoft, GE and the Big 4 consultancies.

A little reflection reveals that avoiding employee performance ranking is a case of “throwing the baby out with the bathwater”, because if employees are really no longer measured, then on what basis are promotions, salary increases and development investments made? Presumably it means that the “real” employee performance measures have been pushed underground into secret management meetings and agendas where favouritism and discrimination cannot be detected. This cannot be good for employer brand.

If additional proof is required that employee ranking will always exist, consider what would happen if these companies hit a serious downturn and were forced to lay off employees, as was recently the case with Yahoo! If employees are not ranked in some way, any layoffs would appear random and would represent a return to the talent management lottery scenario.

It must therefore be reasonable to assume that, no matter what a company’s public relations department says, employee performance ranking still exists everywhere, even if it has been temporarily pushed underground in the past few years. If even more proof is required, confidentially ask an operational manager with whom you have a trusted relationship who in their team has the greatest and the least performance potential. Next, ask them whose performance potential lies somewhere near the middle. What you’re doing here is, in fact, getting the manager to articulate the “secret” scale that they use for salary increases and promotions.

An important role of people analytics professionals – and HR in general – is to contribute positively to an organisational culture where such scales are part of the analytics mainstream and not hidden in secret meetings. This ranking approach can be extended – and is indeed already used by many companies – by asking multiple managers to rank the employees and/or using a team 360.
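When several managers rank the same team, their rank orders need to be combined somehow. The source does not specify a method; the sketch below assumes a simple mean-rank aggregation, which for complete rankings orders employees the same way as a Borda count. The employee names and rankings are hypothetical.

```python
from __future__ import annotations


def aggregate_rankings(rankings: list[list[str]]) -> list[tuple[str, float]]:
    """Combine several managers' rank orders (best first) into one list by mean rank.

    Assumes each manager ranks exactly the same set of employees.
    """
    employees = set(rankings[0])
    if any(set(r) != employees or len(r) != len(employees) for r in rankings):
        raise ValueError("every manager must rank the same employees exactly once")
    # Rank position 1 = highest performance potential in that manager's view
    positions = {e: [r.index(e) + 1 for r in rankings] for e in employees}
    mean_rank = {e: sum(p) / len(p) for e, p in positions.items()}
    # Lower mean rank is better; ties are broken alphabetically so the output is stable
    return sorted(mean_rank.items(), key=lambda item: (item[1], item[0]))


# Hypothetical rankings of one team by three managers
combined = aggregate_rankings([
    ["Ana", "Ben", "Cal", "Dee"],
    ["Ben", "Ana", "Cal", "Dee"],
    ["Ana", "Cal", "Ben", "Dee"],
])
for name, avg in combined:
    print(f"{name}: mean rank {avg:.2f}")
```

Large disagreements between managers about the same employee are worth investigating in their own right, since they may point to the inconsistent performance definitions discussed earlier.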

The above ranking process will also prove useful to companies wishing to avoid legal accusations of random dismissal and layoff; Yahoo! for example would have benefited from this approach.

4. Technical reasons why data may not hang together

Finally, there are some technical statistical reasons why the Four-Block People Analytics Model data may not correlate, such as:

- The data set may not be large enough: detecting modest correlations reliably requires a lot of data

- If you’re using parametric (non-machine-learning) techniques, the data may not be sufficiently normally distributed and/or the relationships may not be linear. This is another good reason for companies to consider migrating to machine learning techniques.
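To put a number on "large enough", the sketch below estimates the sample size needed to detect a correlation of a given size. It assumes the common Fisher z approximation, n = ((z₁₋α/₂ + z_power) / atanh(r))² + 3, with conventional defaults of 5% two-sided significance and 80% power; the function name is hypothetical.

```python
from math import atanh, ceil
from statistics import NormalDist


def min_sample_size(r: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate number of employees needed to detect a correlation of size r.

    Uses the Fisher z approximation for a two-sided test of 'no correlation'.
    """
    if not 0 < abs(r) < 1:
        raise ValueError("r must lie strictly between -1 and 1 and be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    return ceil(((z_alpha + z_power) / atanh(abs(r))) ** 2 + 3)


for r in (0.1, 0.2, 0.3):
    print(f"r = {r}: about {min_sample_size(r)} employees")
```

With these defaults, detecting a correlation of 0.2 needs roughly 194 employees and one of 0.1 roughly 783, which is one reason why small teams rarely produce significant results.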

5. Take Away

Poor correlations in the Four-Block People Analytics Model are a stark reminder that people analytics data needs to be collected with specific business outcomes in mind. Using any other form of data ultimately results only in wasted time and resources. This approach must involve operational managers, who are critical to defining the metrics to be used. Only then can people analytics truly deliver on all that it promises.

Please do share your comments, thoughts, successes and failures with me below.

©Blumberg Partnership 2016

With thanks to Tracey Smith of Numerical Insights who kindly reviewed this article.

People analytics: how much should you spend on technology?

Can significant investment in expensive people analytics technology ever be justified?

Compare people analytics to marketing analytics: for marketing analytics, the case for expensive technology is reasonably clear because sample sizes often run into the hundreds of thousands. On that scale, high-tech data fishing will usually pay for itself with the discovery of a few new profitable market segments.

In the case of people analytics, however, few global workforces are sufficiently large to justify these expensive fishing expeditions in the hope of finding valuable workforce patterns. And even if patterns are found, the ROI would be virtually swallowed up by the cost of the technology required to generate it.

Experience suggests that when it comes to people analytics, optimal ROIs are generated not by expensive data fishing, but by focusing on specific well-defined problems identified by the business. The solutions to most of these problems do not require significant technology investments and can instead be solved with low-cost packages like SPSS and cost-effective cloud technologies. In truth, we’ve even helped companies save $10m using just Excel as part of a well-planned structured data methodology. I’ll post something on people data strategies in the next few days.

The bottom line is that ROIs on people analytics could be even higher if companies avoid unnecessary capital outlays on excessive technology.

Do Competency Frameworks Work in Real-World Organisations?

Introduction

“Do Competency Frameworks Work in Real-World Organisations?”

This question about competency frameworks, psychometric tests and 360° surveys etc. is regularly posed in various forms on LinkedIn analytics groups, and inevitably generates a lot of debate. I’d like to discuss it here in the context of the phrase ‘real-world’ via a case-study.

The term “real world” reflects the perception of many senior and operational managers that while competency frameworks and psychometric tests may be effective in the controlled academic environments in which they are created and developed, they may be less effective in their ‘real-world’ organisations. Could there be any truth to their concerns?

Analysts and businesspeople define “effective” differently

To answer this, we need to delve more deeply into the meaning of the term “effective” in this context. Without getting overly academic, analytics practitioners usually consider a framework to be “effective” if it has sufficiently high construct validity, in that it accurately measures the construct it claims to measure, such as competency, ability or personality. Now contrast this with operational managers, for whom the term “effective” usually refers to the predictive (or concurrent) validity of the measure; that is, the extent to which organisational investments in employee frameworks predict desirable employee outcomes such as job performance, retention and ultimately profitability.

And herein lies the rub: even if a framework accurately measures the construct it purports to measure (e.g. competency or ability), construct validity alone does not mean that the instrument predicts important employee outcomes. The framework will therefore not necessarily deliver metrics that can serve as inputs to talent programmes specifically designed to maximise the performance and retention of high potentials.

How to establish the validity of a framework

So how do you establish the validity of a competency framework or 360° survey etc.?

- You first test concurrent validity on a representative sample of your employees to determine which competencies correlate with desired employee outcomes in the ‘real world’ i.e. in your organisation (as opposed to wherever the vendor claims to have tested it).

- You then refine the framework based on what you learn from these correlations.

- If the potential cost of framework failure is particularly high, you deploy a methodology to test causality/predictive validity.
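The first two steps above can be sketched as a simple screen that correlates each framework scale with a performance outcome and labels it. This is an illustration only: it assumes the Fisher z approximation for p-values (an exact t-test is the usual alternative), a 5% significance level, and hypothetical scale names and scores for just ten employees.

```python
from __future__ import annotations

from math import atanh, sqrt
from statistics import NormalDist


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def approx_p_value(r: float, n: int) -> float:
    """Two-sided p-value for 'no correlation', via the Fisher z approximation."""
    if abs(r) >= 1:
        return 0.0
    z = atanh(r) * sqrt(n - 3)
    return 2 * (1 - NormalDist().cdf(abs(z)))


def screen_scales(scales: dict[str, list[float]], outcome: list[float],
                  alpha: float = 0.05) -> dict[str, tuple[float, str]]:
    """Correlate each framework scale with the outcome and label it keep/drop/review."""
    report = {}
    for name, scores in scales.items():
        r = pearson_r(scores, outcome)
        if approx_p_value(r, len(outcome)) >= alpha:
            verdict = "no detectable relationship"
        elif r < 0:
            verdict = "negative: review before use"
        else:
            verdict = "keep"
        report[name] = (r, verdict)
    return report


# Hypothetical scores for ten employees; the outcome is a performance measure
performance = [3, 5, 4, 6, 7, 6, 8, 9, 8, 10]
scales = {
    "planning": [2, 4, 4, 5, 7, 6, 7, 9, 9, 10],
    "charisma": [9, 8, 8, 7, 5, 6, 4, 3, 3, 1],
    "punctuality": [6, 4, 6, 4, 6, 4, 6, 4, 6, 4],
}
for name, (r, verdict) in screen_scales(scales, performance).items():
    print(f"{name}: r = {r:+.2f} -> {verdict}")
```

Scales flagged as negative deserve particular attention, since selecting or developing people on them could work against the performance the framework is meant to improve.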

A case study

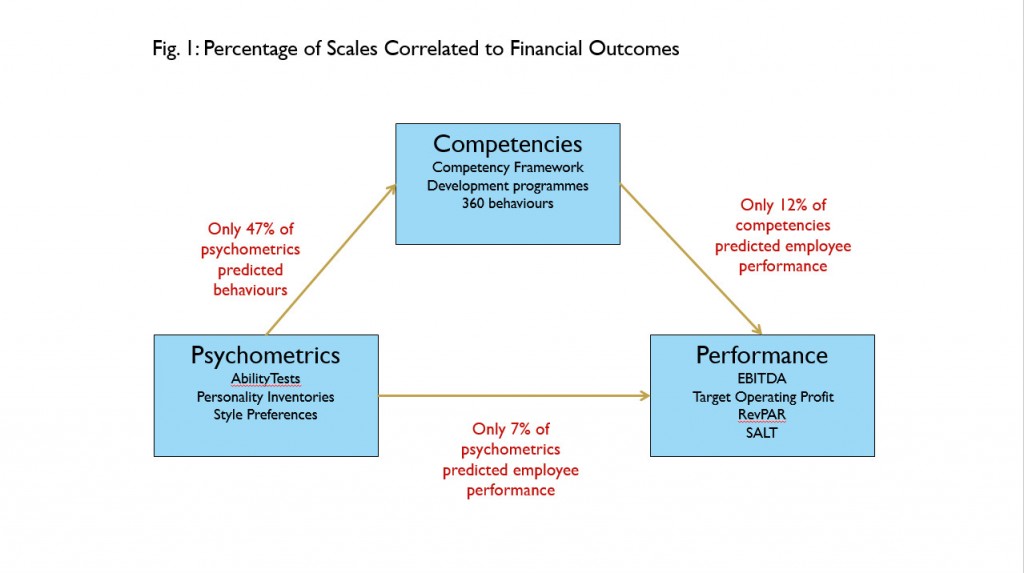

Sadly, few organisations do such testing before procuring frameworks, which can lead to unfortunate results. As an illustration, we recently examined trait and learnable competency data for 250 management employees in the same role at a well-known global blue-chip brand, together with their job performance scores (Fig 1).

We modelled them as:

- Psychometric scores: Fixed characteristics unlikely to change over time and therefore potentially useful for selection if they correlated with performance

- Competencies: Learnable behaviours as assessed by the company’s competency framework, 360° survey and development programme assessors. Useful for developing high performance.

- Employee Performance: We were fortunate to obtain financial performance data for each manager which means these scales were reasonably objective. Ultimately, the reason for investing in psychometrics and competencies for selection and development is to achieve high performance here.

We found the following relationships in the data:

- Psychometric and competency scores: As can be seen, only 47% of psychometric test scales correlated with learnable competencies. Effect sizes were small, typically less than 0.20, meaning that the tests would not be helpful for selecting job candidates likely to exhibit the competencies valued by the organisation. One psychometric test, however, did have a large correlation with competency scores; unfortunately, this correlation was negative, meaning that the higher the job candidates’ test scores, the lower their competency scores: hardly what the company intended when it bought this test.

- Psychometric scores and Employee Performance: Only 7% of the psychometric test scales correlated with employee performance, and again effect sizes were typically less than 0.20, meaning that the psychometric tests were not useful for selection. Again, one test shared a negative relationship with performance: if high scores on it were used for candidate selection, it would be likely to select low performers.

- Competencies and Employee Performance: Only 12% of the learnable competency scales correlated with performance, and again effect sizes were small, meaning that investing in these competencies to drive development programmes was not worthwhile. Once more, some of these competencies had negative correlations with performance, meaning that developing them might actually decrease employee performance.

Conclusion

So, to go back to the original question: Do competency frameworks and psychometrics work in real-world organisations? One could hardly blame managers in the above organisation for being somewhat sceptical. This scenario is not unusual and is typical of the results we find when auditing the effectiveness of companies’ investments into competency frameworks and other employee performance measures.

But does it have to be this way? I believe that the answer to this is no, and that frameworks can not only work, but can significantly improve performance in the ‘real world’.

All that is needed is some upfront analysis of data such as that outlined above, since this is what determines whether performance is being properly measured and which scales are and are not useful (so that poor scales can be removed and, if necessary, replaced with better predictors/correlates). Typically, we see employee performance improvements of 20% to 40% by simply following this process.

So the answer to the original question is ultimately a qualified “yes” – competency and employee frameworks can work provided that systematic rigour is used in selecting instruments for ‘real world’ applications.

How to get value from competency frameworks and psychometric tests

- Frameworks cannot predict high performance if you don’t know what good looks like. The first step in procuring frameworks is therefore to ensure that “high performance” is clearly defined and measurable in relation to your roles. And if you want to appeal to your customers – operational managers – use operational performance outcomes.

- Before investing in a framework that could have a negative effect on your workforce’s performance, get an independent analytics firm’s objective evaluation of each scale’s concurrent and/or predictive validity for employee populations similar to your own. If the vendor you’re considering cannot provide independent evidence, request a discount or get expert advice of your own before purchasing anything.

- If your workforce is larger than around 250 employees, you should certainly get expert advice to confirm that a proposed framework does indeed correlate with or predict performance in your own organisation before making a purchase.

- If you need professional help interpreting quantitative evidence provided by vendors, get it from an independent analyst – never from a statistician working for the vendor trying to sell you the framework.
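Why does workforce size matter? One standard way to see it (not necessarily how the 250 figure above was arrived at) is the Fisher z approximation for the sample size needed to detect a correlation of a given size. The sketch below assumes a two-sided test at α = 0.05 with 80% power; `employees_needed` is a hypothetical helper name.

```python
import math
from statistics import NormalDist


def employees_needed(r, alpha=0.05, power=0.80):
    """Approximate sample size to detect a correlation r (two-sided test),
    using the Fisher z-transformation approximation."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for alpha
    z_beta = NormalDist().inv_cdf(power)           # quantile for desired power
    fisher_z = math.atanh(r)                       # 0.5 * ln((1 + r) / (1 - r))
    return math.ceil(((z_alpha + z_beta) / fisher_z) ** 2 + 3)


for r in (0.20, 0.30):
    print(f"r = {r:.2f}: about {employees_needed(r)} employees")
```

Under these assumptions, detecting a correlation of 0.20 needs roughly 194 employees and one of 0.30 roughly 85, so an organisation with only a few dozen people in a role is unlikely to be able to validate a framework locally.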

People Analytics: Who’s fooling who?

Participants at last week’s TMA Conference asked me to repost this blog, so here it is as requested.

Introduction

I’m going to argue here that many organisations using people analytics to improve their workforce programmes are fooling themselves.

Let me explain: evidence-based people analytics relies on a model something like this:

HR programme –> Competencies –> Employee performance –> Org performance

That is, you invest in workforce programmes to increase employee competencies (“the how”) which in turn delivers increased employee and organisational performance (“the what”).

The role of people analytics is to calculate whether your people programmes do in fact raise employee competencies and performance. If the analytics shows that your programmes are not improving performance, it provides guidelines on how to fine-tune them so that they do.
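A minimal sketch of the first question, whether programme participants end up performing better, is a standardised mean difference (Cohen’s d) between participants and non-participants. This is an illustration only: `cohens_d` and the scores are hypothetical, and the difference can only be read as the programme’s effect if the two groups are comparable, for example because employees were randomly assigned to the programme.

```python
import math
from statistics import mean, stdev


def cohens_d(participants, non_participants):
    """Standardised mean difference (Cohen's d) using a pooled standard deviation."""
    n1, n2 = len(participants), len(non_participants)
    s1, s2 = stdev(participants), stdev(non_participants)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (mean(participants) - mean(non_participants)) / pooled_sd


# Hypothetical performance scores after a development programme
attended = [3.4, 3.9, 4.1, 3.6, 4.0]
did_not_attend = [3.2, 3.5, 3.3, 3.8, 3.4]
print(f"d = {cohens_d(attended, did_not_attend):.2f}")
```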

Faulty competency and performance management frameworks

Over the past 15 years, I’ve asked many conference and workshop audiences the following questions about their performance management and competency frameworks:

1. Performance management: To what extent do you believe in your organisation’s performance ratings as measured, say, by your annual performance review? Do they objectively reflect your real behaviour, and are they a fair, unbiased basis for your next promotion and salary increase (as opposed to your manager promoting whoever they feel like promoting)?

2. Competency management: To what extent do you believe that your organisation’s competency framework accurately captures the competencies required for high employee performance in your organisation?

The vast majority of audiences tell me that they believe in neither their competency nor their performance management frameworks because, for example:

1. Competency frameworks: In most cases, the competency framework was brought in from outside rather than created by managers who understand the real competencies required for high performance in their particular organisational culture. As a result, managers don’t believe in the framework and treat it as a tick-box exercise. Furthermore, since a single framework typically covers multiple job families, it is unlikely to predict performance in all of them: is it really likely that salespeople and accountants require the same competencies for high performance? Research shows that these lists should contain very different competencies, yet most organisations use a “one size fits all” framework, possibly with minor adjustments.

2. Performance management: Performance ratings are ultimately the subjective view of an all too human line manager. What chance, then, do employees have of an unbiased performance rating? (Vodafone is a notable exception here: evidence for its performance ratings is verified by multiple people.) Furthermore, many organisations use forced performance distributions, meaning that only a fixed proportion of people can be rated as high performers. Who can blame high performers for not believing in their performance management system or their chances of promotion when the forced distribution says “sorry, but the top bucket is already full”?
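To make the forced-distribution problem concrete, here is a sketch of how quota-based rating works: employees are ranked and then poured into buckets of fixed size, however close their scores are. The function `forced_distribution`, the quotas, names and scores are all hypothetical, and real schemes differ in detail (for example in how they handle ties).

```python
def forced_distribution(scores, quotas):
    """Assign rating buckets by rank under fixed quotas.

    scores: dict mapping employee -> performance score
    quotas: list of (bucket, share) pairs from top to bottom; shares sum to 1
    """
    ranked = sorted(scores, key=scores.get, reverse=True)
    n = len(ranked)
    buckets, start = {}, 0
    for i, (bucket, share) in enumerate(quotas):
        # The last bucket takes whoever is left, absorbing any rounding error
        end = n if i == len(quotas) - 1 else min(n, start + round(share * n))
        for employee in ranked[start:end]:
            buckets[employee] = bucket
        start = end
    return buckets


# A hypothetical team in which everyone performs strongly
team = {"Ana": 95, "Ben": 94, "Cai": 93, "Dee": 92, "Eve": 91}
quotas = [("top", 0.2), ("middle", 0.6), ("bottom", 0.2)]
print(forced_distribution(team, quotas))
```

Here Eve scores 91, only four points below Ana, yet lands in the bottom bucket because the quota rather than her performance decides her rating.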

GIGO: Garbage In, Garbage Out

So here’s the problem: if like most people you don’t believe in your organisation’s competency and performance management frameworks, then you certainly aren’t in a position to believe in the results of statistical analysis based on data generated by these frameworks. As the old acronym GIGO says, Garbage In, Garbage Out.
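One way to put numbers on GIGO comes from classical test theory: measurement error in either variable attenuates (shrinks) the correlation you observe, by a factor equal to the square root of the product of the two measures’ reliabilities. The sketch below applies that formula; `attenuated_correlation` and the reliability values are hypothetical, and it only covers random noise, not systematic bias such as favouritism, which can distort results in either direction.

```python
import math


def attenuated_correlation(true_r, reliability_x, reliability_y):
    """Correlation you would expect to observe between two measures, given
    the true correlation and each measure's reliability (classical test theory)."""
    return true_r * math.sqrt(reliability_x * reliability_y)


# Hypothetical: a genuine competency-performance link of 0.50, measured with a
# competency framework of reliability 0.60 and ratings of reliability 0.50
print(round(attenuated_correlation(0.50, 0.60, 0.50), 2))  # 0.27
```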

What is the solution? I’ll cover this in next week’s blog, but as an interim taster:

1. Stop doing people analytics until you’ve fixed your frameworks. You’re wasting valuable resources on analysis based on data you don’t even believe in (and putting the data into an expensive database does not make the data any more valid).

2. You need to design your own competency frameworks, one for each focal role. When I say “you”, I mean that this needs to be done by your line managers (if you want them to believe in it) and facilitated by you as HR, e.g. using repertory grids.

3. If you accept that managers are human and that any performance ratings will therefore always be subjective, find ways to minimise the impact of subjectivity by using multiple raters/rankers.
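The benefit of multiple raters can be estimated with the Spearman-Brown formula, which gives the reliability of the average of k comparable raters. This sketch assumes raters are interchangeable and make independent errors (shared biases do not average out); `pooled_reliability` and the single-rater reliability of 0.50 are hypothetical.

```python
def pooled_reliability(single_rater_reliability, k):
    """Spearman-Brown: reliability of the mean rating from k comparable raters."""
    r = single_rater_reliability
    return k * r / (1 + (k - 1) * r)


# Hypothetical single-rater reliability of 0.50
for k in (1, 2, 3, 5):
    print(k, round(pooled_reliability(0.50, k), 2))
```

With these assumptions, averaging three raters lifts reliability from 0.50 to 0.75.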

More on this next time.

The greatest impediment to building a people analytics function

People often debate the greatest impediments to building a people analytics function. Typical answers include lack of technical skills, lack of data, lack of senior management support, an organisational culture that doesn’t view employee behaviours as quantifiable, lack of funding, and so on.

While in my experience all of these contain some truth, the list misses the number one barrier, so well expressed by Chris as his key takeaway after this week’s CIPD analytics workshop: “The obvious yet easily overlooked concept of finding the business problem first then working back from there”.

So what do we mean by first finding a business problem and starting from there? The first thing most analysts learn is that analysing without first proposing a model is usually a waste of time, because without a model you may end up finding relationships that appear by chance but are not real. What is a model? Here’s an example:

Programme £ -> Competencies (how) -> Performance (what) -> Organisational objectives

This model says that:

1. You spend money on a people programme, e.g. development, compensation, recruitment, employee relations, and so on

2. The only reason you spend this money is to increase the available pool of organisational competencies (otherwise known as human capital: human capital is about competencies, whereas human resources is about headcounts)

3. The only reason you want to increase the organisational competencies is to improve employee performance

4. The only reason you want to increase employee performance is to increase the achievement of organisational objectives

In other words, the model says we only spend money on people programmes to increase the achievement of organisational objectives. (A side implication: any programme that doesn’t build the competencies which contribute to organisational objectives is not helping the organisation to achieve them.)
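The earlier warning that analysing without a model can surface relationships that exist only by chance can be quantified. If no real relationships exist and each test is run at a 5% significance level, the sketch below compares testing only the three links of the model above with correlating every pair of 20 columns in an HR dataset. It assumes independent tests, which is a simplification, and the helper names and the 20-column dataset are hypothetical.

```python
def chance_findings(n_tests, alpha=0.05):
    """If no real relationships exist, return (probability of at least one
    'significant' result, expected number of such results), assuming
    n_tests independent tests at significance level alpha."""
    return 1 - (1 - alpha) ** n_tests, alpha * n_tests


def all_pairs(n_columns):
    """Number of pairwise correlations among n_columns variables."""
    return n_columns * (n_columns - 1) // 2


model_driven = chance_findings(3)            # the three links in the model chain
data_first = chance_findings(all_pairs(20))  # every pair among 20 columns
print(f"model-driven: P(any) = {model_driven[0]:.2f}, expected = {model_driven[1]:.2f}")
print(f"data-first:   P(any) = {data_first[0]:.2f}, expected = {data_first[1]:.1f}")
```

Under these assumptions the model-driven approach has about a 14% chance of any spurious finding, while the data-first approach is almost certain to produce one, with about 9.5 false “discoveries” expected among its 190 tests.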

Now this model, like any model, has limitations, for example:

1. Some people argue we spend money on people programmes for reasons other than achieving organisational objectives, e.g. as part of a corporate social responsibility to our employees (but try explaining that one to investors in public companies)

2. This model includes factors like “employee engagement” and “manager behaviour” as “competencies” (but this is just semantics: if you can come up with a better name than “competencies” for that box, use it; some people refer to it as the “how” we get things done)

3. It’s difficult to prove the direction of causality: for example, how do we know that performance doesn’t “cause” a “competency” like engagement?

4. How do you measure competencies? Presumably via your competency framework; if you don’t have one, then use a Repertory Grid to develop one.

5. How do you measure performance? Chances are you have a performance management framework so you could use that as a starter. If you don’t trust it, then this model gives you a good reason to fix it.

6. How do you measure achievement of organisational objectives for employees without P&L/budget responsibility? Answer: you’ll get tons of value by focusing on competencies and performance management for the first year or three; you can always come back to this afterwards.

But despite limitations like these, the model has two major benefits:

1. It translates directly from model to Excel spreadsheet (one row per employee, one column for each block in the model)

2. More importantly, it forces people to think about how every penny of HR money will result in the achievement of some organisational objective. As Doug Bailey, Unilever HRD, said at a Valuing Your Talent event last month, “Whenever someone requests funding for an HR programme, I ask them how this will help us sell another box of washing powder”.

So how does all this link to Chris’s original comment about finding the business problem first? It means that unless an analytics initiative is helping to fix some unachieved organisational objective, it is worthless. Thus the rule is:

The only way to start any analytics programme is by first finding an important organisational objective that is not being achieved (preferably one that most of the board agree is a problem – that way you’re more likely to get a budget for analytics).

So the bottom line is that if you’re achieving all your organisational objectives, you don’t need analytics. For the rest of us, chances are there’ll be some organisational objective not being achieved.

Let me hasten to add that organisational objectives are the objectives that appear in your corporate/organisational business strategy, like “reduce the number of people in the population with disease X” or “achieve revenues of £X”. More importantly, organisational objectives are not HR objectives like “employee engagement”, “employee churn” or “absence rates”. In the above model, those would count only as Competency or Performance measures. (In other words, don’t confuse organisational objectives with HR objectives.)

So after reading this, you might say this is all so obvious: how else could you start an analytics project? Yet I’d say that 90% of the calls I get (and I’m sure other analytics consultants would agree) start with: “Our HR function has collected a lot of data over the past few years. Can you please help us do something with it?” I say “Like what?”, and the reply is usually: “Help us to make HR look good”. I suggest that the best way to make HR look good is by enabling the business to achieve its organisational objectives, and the only way analytics will do that is by starting with the business problem and not with the data.

In fact starting with the data is like saying “We’ve got all these motor spares lying around; can you help us build a car with them?”. What are the chances you’ll have all the right spares to build a car? If your “organisational objective” or problem is to build a car, you’d surely first determine what parts you need. Then by all means do an audit of what parts you have lying around. Chances are you’ll find you only have 5% of the parts you need to achieve your objective; for the rest, you’ll have to go out and obtain the other 95% of parts you need. It’s exactly the same with data for analytics: for any given problem with achieving an organisational objective, you probably only have 5% of the data you need to solve it.
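The “list the parts first, then audit what you have” step translates directly into a data-gap check: write down the fields the model needs, compare them with the fields HR already holds, and see what is missing. The `data_gap` helper and the field names below are hypothetical.

```python
def data_gap(required_fields, available_fields):
    """Return (share of required fields already held, sorted missing fields)."""
    required = set(required_fields)
    held = required & set(available_fields)
    missing = sorted(required - held)
    return len(held) / len(required), missing


# Hypothetical: fields a sales-performance model needs vs. fields HR holds
needed = ["sales_per_rep", "customer_retention", "product_knowledge",
          "coaching_hours", "territory_size"]
on_file = ["absence_days", "engagement_score", "territory_size", "training_attended"]

coverage, missing = data_gap(needed, on_file)
print(f"coverage: {coverage:.0%}")  # coverage: 20%
print("still to collect:", missing)
```

Here only one of the five required fields already exists, so four would have to be collected before the analysis could start.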

So there you have it: that’s why Chris felt his biggest insight from the workshop was “The obvious yet easily overlooked concept of finding the business problem first then working back from there”. Failing to gain that insight is the biggest impediment to creating an effective people analytics function.